Introduction

Welcome to the "Getting Started" guide for Vodafone Analytics on the Developer Marketplace. In this guide, we'll demonstrate how to quickly visualise and interpret a dataset generated by Vodafone based on the network usage of its customers (more information on how to request it can be found here). By exploring this example, you'll see the powerful insights that can be derived from data produced by our advanced analytics tools.

The dataset file reports footfall density at the population level, meaning it doesn't just count devices in the area. Instead, it employs sophisticated algorithms to estimate the total number of people passing through at the time, so there's no need to try and adjust these numbers yourself - they've already been expanded for you! Importantly, the data is presented in an aggregated format, ensuring that it is anonymised to protect individual privacy.

For this type of analysis, quadkeys are frequently used. If you're not familiar with them, think of quadkyes as a sequence of digits that represent different size tiles on Earth's surface. You can find some excellent resources on this topic in articles by Microsoft and MapBox.

The starter dataset is provided in industry-standard export format: a simple text/csv (comma separated values) file. This ensures compatibility with most common software packages. In this guide, we'll focus on the "footfall-measures" file, which provides a count of unique visitors across three London boroughs in July 2024.

Objective

In this article we will:

-

Explore the raw data file.

-

Create an aggregated set of values per tile.

-

Map the busiest locations

Let's get started!

Prerequisites

The analysis of datasets can be done using various tools across different technologies, all of which are effective. Given the small size of the starter dataset, the following will suffice:

-

Python 3.11

-

pip 22.4, the recommended package management system for Python that allow developers to install and manage software packages written in Python.

-

The starter dataset requested from here and unzipped into your working directory. This guide uses the “footfall-measures_20240701_20240731_starter.csv” file.

If you have access to a larger dataset (e.g., national data or multiple months), you might want to consider using PySpark or a Big Data platform, or an appliance running Elastic MapReduce (EMR).

Setup

To begin, we will create and activate a virtual environment, install Jupyter Notebooks and then install the necessary libraris.

#Create a virtual environment....

python3 -m venv vfa

#Run the activation script to configure the shell environment

source vfa/bin/activate

#Install the jupyter notebook using pip3

pip3 install notebook

#Install the required libraries....

pip3 install pandas geopandas mercantile shapely matplotlib contextily folium plotly

#Run jupyter notebooks

jupyter notebookExploratory Data Analysis

After the last line from the previous code was executed, a new webpage should have opened up, listing the contents of the current folder. On this page, click on the ”New” button and select the “Python 3 (ipykernel)" option, as depicted on the image below.

Figure 1: opening a new Python 3 file

Now, we need to open the file and examine its size and shape. The dataset is divided into quadkeys level 17 tiles. Since quadkeys IDs can have leading zeros, it's crucial to load them as strings rather than numeric fields to ensure accurate location representation. After loading, we'll convert the "measure_value" column to a numeric format. The code below accomplishes that:

import pandas as pd

import geopandas as gpd

import mercantile

from shapely.geometry import Polygon

import matplotlib.pyplot as plt

import contextily as ctx

import foliumfrom folium.plugins import HeatMap

import plotly.express as px

# Specify the path to your CSV file

CSV_FOOTFALL_FILE = './footfall-measures_20240701_20240731_starter.csv'

CSV_FOOTFALL_AGG_FILE = './footfall-footfall-measures_20240701_20240731_starter-agg.csv'

EXPORT_HEATMAP_FILE = './vfa_heatmap.html'

EXPORT_CHOROPLETH_FILE = './vfa_choropleth.html'

# Read the CSV file into a DataFrame

# Set dtype to str for all columns except 'measure_value'

df = pd.read_csv(CSV_FOOTFALL_FILE, dtype=str)

df['measure_value'] = pd.to_numeric(df['measure_value'])

# Display the first few rows of the DataFrame

print(df.head())Once that is done, click on the "Run" button to execute the script, in the toolbar:

Figure 2: toolbar

The output should look like this:

dst_id dst_unit time_aggregation measure_name measure_value

0 03131313110332311 QK17 2024-07-23 08 unique_visitors 1986

1 03131313110332311 QK17 2024-07-22 21 unique_visitors 2691

2 03131313110332311 QK17 2024-07-23 01 unique_visitors 2119

3 03131313110332311 QK17 2024-07-23 12 unique_visitors 2627

4 03131313110332311 QK17 2024-07-28 01 unique_visitors 2362

The "dst_unit" field indicates the measurement unit for a particular destination ID, in this case, quadkey 17 (QK17), which measures approximately 1.1943 meters per pixel. This means that the tiles are quite detailed, allowing for precise mapping and location identification.

The starter file contains a measure called "unique_visitors" meaning that when a device enters an area multiple times, it is only counted once. This field can contain other values which represent different measurements being provided, of which unique visitor count is the most popular measure. The "time_aggregation" field represents the period under study, which for this dataset is July 2024.

To explore the time dimensions included in the file, use the following code:

# How many time aggregations are in the file

print('There are', df['time_aggregation'].nunique(), 'time dimensions in this file.')

# Show the first 5 entries

df['time_aggregation'].unique()[:5]Which should generate the following output, after the script is run by pressing the button, same as previous coding:

There are 775 time dimensions in this file.

array(['2024-07-23 08', '2024-07-22 21', '2024-07-23 01', '2024-07-23 12',

'2024-07-28 01'], dtype=object)These dimensions represent aggregates for each hour in July 2024. 775 is the number of hours in the month (31*24 = 744) plus the number of daily values (31). We will add a measure_granularity field (daily or hour) derived from the time_aggregation field. Then we will remove the daily values and work with the hourly values (744 rows):

# Infer daily or hourly measurement (daily are yyyy-mm-dd = 10 characters)

df['measure_granularity'] = df['time_aggregation'].apply(

lambda x: 'daily' if len(x) == 10 else 'hour'

)

# Filter out daily values

df = df[df['measure_granularity'] != 'daily']

# How many time aggregations do we now have (expect 744)

print('There are', df['time_aggregation'].nunique(), 'time dimensions now available.') And here is the output:

There are 744 time dimensions in this file.Given its size, this dataset can be quite demanding when processed on a laptop. The steps below will create an aggregate profile for each tile and save it in a different file, which will be used for the initial analysis.

The code snippet below calculates the minimum, maximum, and average footfall over the entire month for each quadkey. Note you can not simply sum the hourly values together to create a daily. Imagine you have the same people at the location for the entire 24 hour period, summing the values would give you the wrong value and this is why a daily unique visitor count is provided in the dataset.

# Creates aggregates for minimum, maximum, mean (average)

df_agg = df.groupby('dst_id', as_index=False).agg(

measure_min=('measure_value', 'min'),

measure_max=('measure_value', 'max'),

measure_avg=('measure_value', 'mean')

)

# Convert the mean average to a whole number

df_agg['measure_avg'] = df_agg['measure_avg'].round(0)

# Save the file to disk

df_agg.to_csv(CSV_FOOTFALL_AGG_FILE, index=False)Identifying and Plotting Top Locations

Using the file generated by the previous code, the following steps will extract the top 10 locations and plot them on a map.

To begin, some helper functions are needed when dealing with quadkeys. The functions below create the polygon/bounding box for the tiles and provide the latitude and longitude located at the central point of the tile:

# Helper function to calculate the quadkey boundary.

# Function to calculate the centroid of a quadkey

def quadkey_to_lat_lon(quadkey):

# Convert quadkey to tile

tile = mercantile.quadkey_to_tile(quadkey)

# Get the bounding box of the tile

bounds = mercantile.bounds(tile)

# Calculate the center of the tile

lat = (bounds.north + bounds.south) / 2

lon = (bounds.east + bounds.west) / 2

return lat, lon

# Function to convert a quadkey to a polygon

def quadkey_to_polygon(quadkey):

# Convert quadkey to tile coordinates

tile = mercantile.quadkey_to_tile(quadkey)

# Get bounding box for the tile

bounds = mercantile.bounds(tile)

# Create a polygon from the bounding box

return Polygon([

(bounds.west, bounds.south), # Bottom left

(bounds.west, bounds.north), # Top left

(bounds.east, bounds.north), # Top right

(bounds.east, bounds.south), # Bottom right

(bounds.west, bounds.south) # Close polygon

])

# Function to calculate the best map zoom level given a dataframe of latitude and longitude points

def map_zoom_level(df):

# Calculate the range between map locations

lat_range = df['latitude'].max() - df['latitude'].min()

lon_range = df['longitude'].max() - df['longitude'].min()

#Determine a suitable zoom level, adjusting the constant factor as needed

if max(lat_range, lon_range) < 1:

zoom_level = 10 # Higher zoom level for small area

elif max(lat_range, lon_range) < 5:

zoom_level = 6 # Medium zoom level for medium area

else:

zoom_level = 4 # Lower zoom level for large area

return zoom_level+1Now, calculate the centroid latitude and longitude and store them in the dataset along with the geometry of the quadkey (a polygon). These will be used later when the polygons are plotted on maps.

# Create the extra supporting columns used for mapping

df_agg[['latitude', 'longitude']] = df_agg['dst_id'].apply(quadkey_to_lat_lon).apply(pd.Series)

# Creating a new column with geometry data

df_agg['geometry'] = df_agg['dst_id'].apply(quadkey_to_polygon)Next, identify the top 10 tiles by average footfall and maximum footfall:

# Top 10 busiest tiles by their average footfall

df_top_10_avg = df_agg.sort_values(by='measure_avg', ascending=False).head(10)

# Top 10 busiest tiles by their maximum footfall

df_top_10_max = df_agg.sort_values(by='measure_max', ascending=False).head(10)

# View the dataframe for top 10 average

df_top_10_avgThe output will show the following:

- Top 10 by average footfall

dst_id measure_min measure_max measure_avg latitude longitude geometry

981 03131313111232022 4806 83226 35277.0 51.516434 -0.130463 POLYGON ((-0.1318359375 51.51557978375592, -0....

1254 03131313113001233 5116 68560 33165.0 51.495920 -0.144196 POLYGON ((-0.14556884765625 51.49506473014369,...

1011 03131313111232220 6423 81998 30680.0 51.511307 -0.130463 POLYGON ((-0.1318359375 51.510451886248575, -0...

1012 03131313111232221 4666 85729 30605.0 51.511307 -0.127716 POLYGON ((-0.12908935546875 51.510451886248575...

905 03131313111223300 2411 75081 30510.0 51.514725 -0.141449 POLYGON ((-0.142822265625 51.513870548723986, ...

935 03131313111230032 10920 55692 30391.0 51.530106 -0.124969 POLYGON ((-0.1263427734375 51.5292513551899, -...

848 03131313111222312 9873 55678 29952.0 51.513016 -0.157928 POLYGON ((-0.1593017578125 51.512161249555156,...

918 03131313111223331 5578 74285 28956.0 51.511307 -0.133209 POLYGON ((-0.13458251953125 51.510451886248575...

1343 03131313113010210 2689 64119 28828.0 51.501049 -0.124969 POLYGON ((-0.1263427734375 51.50019435946634, ...

890 03131313111223201 3463 65314 28711.0 51.514725 -0.149689 POLYGON ((-0.15106201171875 51.513870548723986...

# View the dataframe for top 10 maximum

df_top_10_max- Top 10 by maximum footfall:

dst_id measure_min measure_max measure_avg latitude longitude geometry

1012 03131313111232221 4666 85729 30605.0 51.511307 -0.127716 POLYGON ((-0.12908935546875 51.510451886248575...

981 03131313111232022 4806 83226 35277.0 51.516434 -0.130463 POLYGON ((-0.1318359375 51.51557978375592, -0....

1011 03131313111232220 6423 81998 30680.0 51.511307 -0.130463 POLYGON ((-0.1318359375 51.510451886248575, -0...

905 03131313111223300 2411 75081 30510.0 51.514725 -0.141449 POLYGON ((-0.142822265625 51.513870548723986, ...

1102 03131313111322030 3843 74654 28089.0 51.518144 -0.081024 POLYGON ((-0.0823974609375 51.51728895465185, ...

918 03131313111223331 5578 74285 28956.0 51.511307 -0.133209 POLYGON ((-0.13458251953125 51.510451886248575...

855 03131313111222332 1476 71575 8043.0 51.509597 -0.157928 POLYGON ((-0.1593017578125 51.50874245880333, ...

1099 03131313111322021 1384 70050 23579.0 51.518144 -0.083771 POLYGON ((-0.08514404296875 51.51728895465185,...

1061 03131313111233131 1417 68938 20672.0 51.518144 -0.089264 POLYGON ((-0.09063720703125 51.51728895465185,...

1254 03131313113001233 5116 68560 33165.0 51.495920 -0.144196 POLYGON ((-0.14556884765625 51.49506473014369,...Plotting quadkeys on a Map

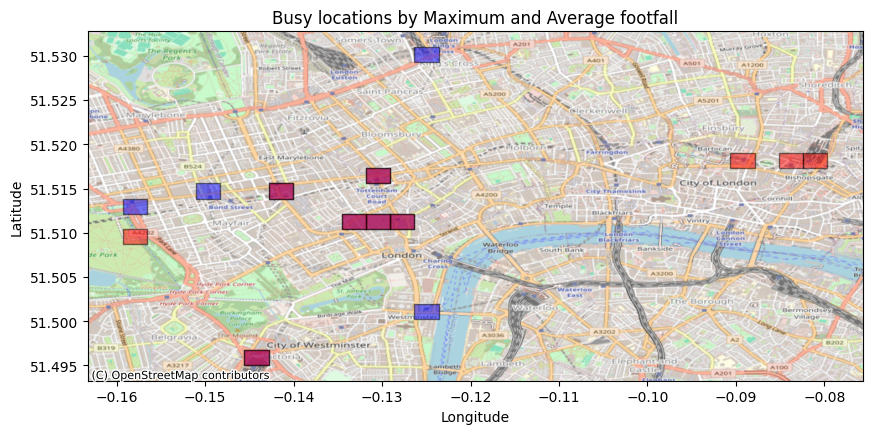

Simply looking at a quadkey ID does not provide much information about its location. In this next step, we'll plot both the top 10 locations for average and maximum footfall on a map. To do this, the top 10 dataframes will be converted int GeoDataFrames. The EPSG:4326 coordinate system (also known as WGS84) will be used. This system is commonly used in online maps.

gdf_avg = gpd.GeoDataFrame(df_top_10_avg, geometry='geometry', crs='EPSG:4326')

gdf_max = gpd.GeoDataFrame(df_top_10_max, geometry='geometry', crs='EPSG:4326')

# Plot the polygons on a map

fig, ax = plt.subplots(figsize=(10, 10))

# Add the geo dataframes to the plot (blue=avg, red=max)

gdf_avg.plot(ax=ax, color='blue', edgecolor='black', alpha=0.5)

gdf_max.plot(ax=ax, color='red', edgecolor='black', alpha=0.5)

# Add some labelling

plt.title('Busy locations by Maximum and Average footfall')

plt.xlabel('Longitude')

plt.ylabel('Latitude')

# Optionally, add a basemap for better visualization

try:

# Use OpenStreetMap as a basemap provider

ctx.add_basemap(ax, crs=gdf_avg.crs, source=ctx.providers.OpenStreetMap.Mapnik)

except ImportError:

print('contextily is not installed, skipping basemap.')

plt.show()This map will display the top 10 average and maximum footfall locations:

Figure 1: plotted quadkeys for the top 10 average and maximum footfall

The locations shown on the map are some of the most popular spots in London.

Summary

Congratulations! You've completed this guide for exploring and mapping the Vodafone Analytics starter dataset. Starting from the raw file, we went over the meaning of its fields, how to trace quadkeys into polygons and finally, how to plot a subset of them in a map. On the next instalment, we will dive further into the analysis of the dataset and go over a few additional information that can be extract from it.

Be sure to check out future guides and blog posts to see how other companies and academia are using these valuable data sources.

Until then, enjoy your coding.